Enforcing the Use of AI in Engineering Teams - Good or Bad Thing?

I answer the question above and share the key challenges with AI adoption, along with my suggestions on how to approach it!

DevStats (sponsored)

I am happy to welcome back DevStats as a sponsor. They were already a long-term sponsor last year and I am happy to have them back again.

Phil Alves, founder of DevStats, is an avid reader of this newsletter and I am also a fan of what they are building.

DevStats highlights bottlenecks, burnout risks, and delivery delays in real time, so you can make continuous process improvements.

Visualize your sprints and focus on mission-critical work

Deploy faster with a clear view of flow metrics

Spot bottlenecks and risks earlier

Improve developer experience

I’ve checked DevStats thoroughly and used it in one of my projects as well, so I highly recommend checking them out if you are looking for such a tool.

Let’s get back to this week’s thought.

Intro

Most engineers are using AI tools, but they don’t necessarily trust them.

Stack Overflow’s 2024 survey already showed this: 76% of respondents said they are using or planning to use AI coding tools, but they don’t necessarily trust them.

And I believe that now, in April 2025, this percentage has only increased, based on my conversations with many engineers and engineering leaders in the past month.

Especially with the rising popularity of Cursor, AI is becoming very useful for speeding up repetitive workflows and providing helpful suggestions when coding, as many engineering leaders have reported.

Also, Model Context Protocol (MCP) is a very exciting technology that allows AI applications to connect with external tools, data sources and systems, which will also help a lot with overall productivity in my opinion.

There have been claims like:

- no more developer hiring,

- AI replacing mid-level engineers, and, most recently,

- AI increasing output 100x, with AI usage enforced for everyone in the organization.

I have already responded to the claims of mid-level engineers being replaced by AI in 2025.

In today’s article, we'll focus on the enforcing part in engineering teams.

Is enforcing the use of AI the right way to go in engineering teams? I am answering this question in the article.

You can also expect to read the key challenges with AI adoption and my suggestions on how to approach the AI adoption process in engineering teams.

Let’s get straight into it!

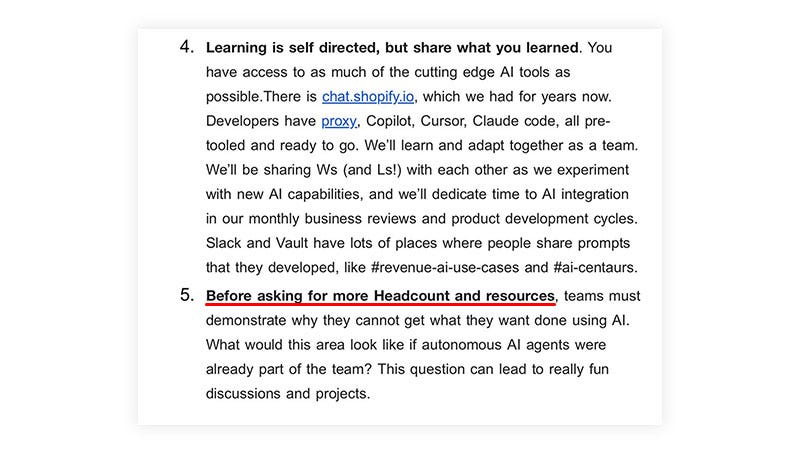

An internal memo by Shopify’s CEO Tobias Lütke was recently shared publicly.

It was quite an interesting read, showing how companies like Shopify approach AI adoption across their teams. You can read the full memo here.

To be fair, I agree with a lot of the points mentioned, and the use of AI provides a good productivity increase if used the right way.

Also, I believe the intention is the right one → to inspire people to use AI in order to be productive and provide more value to the business.

But there are nuances that, if not approached the right way, can hurt the culture and inspire the wrong behavior, leading people to try to game the system.

I’ll break down these nuances in the next sections.

AI definitely improves productivity, but 100X? That’s not true for engineering teams

In my conversations with many engineering leaders over the past month, the highest productivity increase I've heard of is 5x. In most cases, it's been a 0.3x to 1x increase.

Especially when working with larger, more complex systems, AI doesn't help as much at this time.

It's great for prototyping, creating boilerplate, or building quick and simple projects, as I did with the Engineering Leadership Endless Runner Game.

But everyone who has worked with larger, more complex systems knows how important it is not to blindly rely on AI to do things at this time.

You can read the insights from 11 engineering leaders on how they or their teams are using AI to increase Software Development productivity here: How to use AI to increase Software Development productivity (paid article).

Including AI usage as a KPI in the performance review

I’ve heard of specific companies thinking about creating leaderboards of who is using the most LLM credits or who has committed the most AI-generated code to the codebase.

It’s really important to understand that:

Measuring the wrong things inspires people to try to game the system.

So, if you measure only output and usage, people will naturally be inclined to use AI as much as possible, which would produce the wrong results for the business.

And it can also inspire pure individualism, which you don’t want in your organization.

These are the main things I value:

Team productivity > Developer productivity

Helping others > Completing your own tasks

Building the RIGHT things > Amount of things being built

Using AI alone doesn’t mean much if you don’t provide value to the business or you don’t share your knowledge with others and your team is not working well together.

So, if you want to track AI usage, my advice is to map it to business results and how much the individual effort involves helping others and making the team and overall org more productive.

Demonstrating that you can’t do what is needed with AI before asking for more Headcount

This framing makes sense from the business point of view, but from the people perspective it can be perceived differently.

There is already a lot of fear among engineers of being replaced by AI and, as proven many times, teams don’t operate well without psychological safety.

Of course, some form of pressure is fine, but one of the key ingredients of high-performing teams is that people are safe to make mistakes and learn from them, speak up and ask questions.

And this message can be interpreted this way: “We wish to increase the usage of AI so we don’t need to hire new people and can potentially remove some of the existing ones as well”.

This hasn’t been said, but it would be a logical step, as we’ve seen many layoffs happening across the industry.

And as said above, this makes sense from the business perspective: decrease the cost while delivering more value. But it may negatively impact the culture.

I really like Nvidia’s approach and the way they put people first, which in the long run provides great results for them.

Is enforcing AI usage and checking its adoption in different ways the way to go?

Companies should definitely look for ways to include AI at all levels, as it helps a lot with productivity and will only get better over time.

But top-down direction and enforced usage may backfire if not carried out correctly and with people in mind, especially if the expectations regarding the AI productivity increase are unrealistic.

People may try to fake AI usage just to get “high scores” in performance reviews.

Low psychological safety may also leave people reluctant to share knowledge, help each other, and challenge assumptions, and less motivated to keep getting better.

These are the things that need to be mitigated with an enforcing approach.