How HelloBetter Designed Their Interview Process Against AI Cheating

With the new process, they improved their time to hire, minimized the risk of AI cheating and focused more on practical programming fundamentals!

Intro

AI-assisted tooling for job applications and interviews is on the rise.

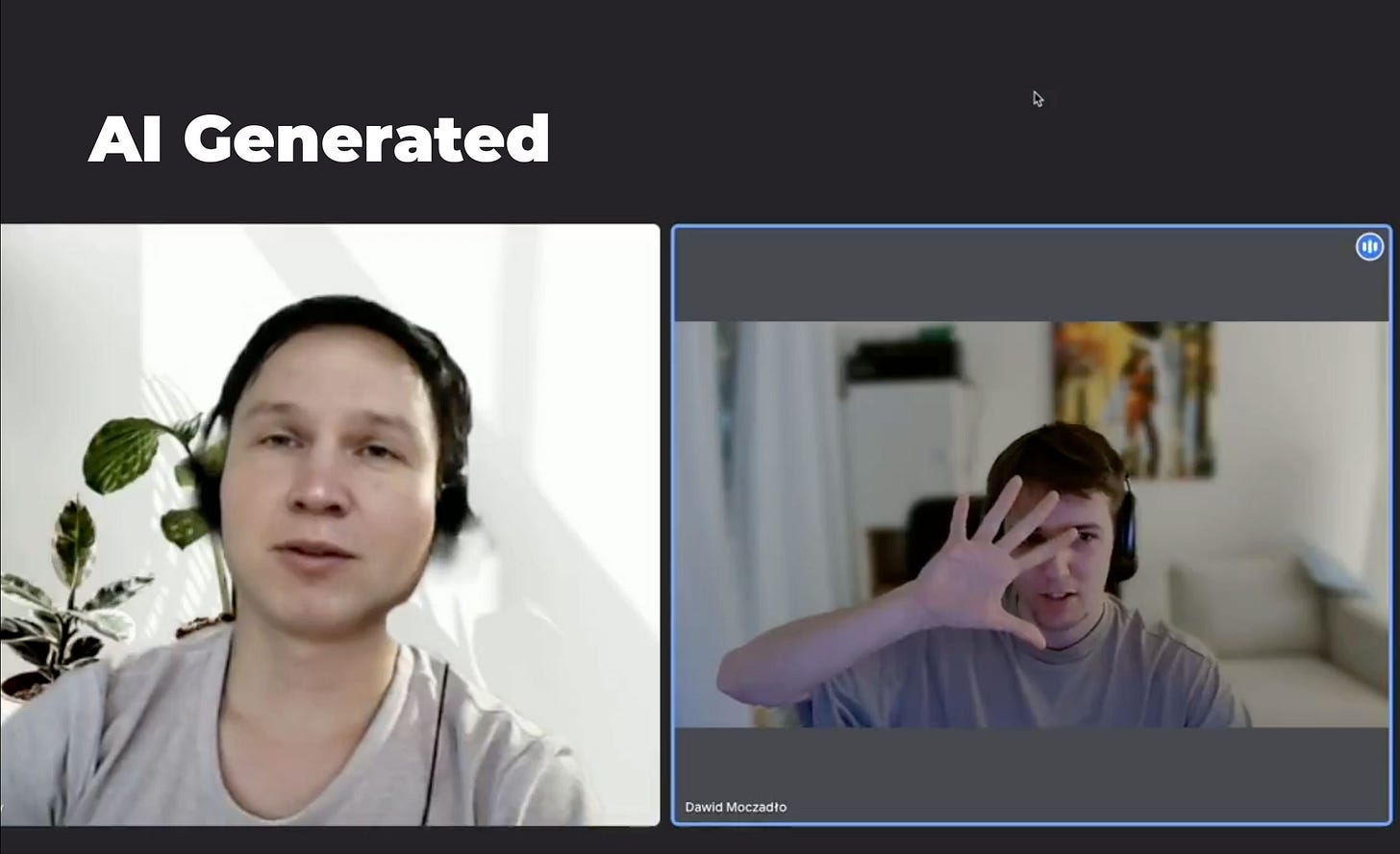

Here is an example, posted by Dawid Moczadło not so long ago, where the interviewee actually changed their appearance with AI and responded to questions with answers generated by ChatGPT.

Dawid also mentioned that he could feel the GPT-4 bullet point-style responses.

The way he exposed the candidate was by asking them to take their hand and put it in front of their face, which would likely remove the AI filter. The candidate refused to do that and he ended the interview shortly after.

In my opinion, such attempts are only going to become more common, so it’s important to design your interview process in a way that minimizes their chances of success.

To help us with this, I am happy to have Anna J McDougall, Software Engineering Manager at HelloBetter, as a guest author for today’s newsletter article.

She is sharing with us how she led the restructuring of their interview process to guard against AI cheating.

Let’s hand it over to Anna!

1. A Fairer, More Modern Approach to Technical Interviews

As someone obsessed with tech careers, business growth, and engineering management, I am constantly coming up against the boogeyman of technical interviews.

In many ways, tech companies are stuck in the thinking of 20–30 years ago: whiteboards, algorithms, logic puzzles, and tasks that revolved around an in-person interview space.

We’re in a new era, where 99% of engineering interviews are done virtually and the candidate’s workspace is uncontrollable by the interviewing team.

We need to adapt to this, not by simply porting previous methods across to an online platform, but by fundamentally rethinking the skills we expect 21st-century software engineers to have.

Naturally, opinions about what is wrong with technical interviews are prevalent both from employers and (potential) employees. For example, leetcode-style assessments have long been derided as being a bad test of abilities.

“Instead,” opponents argue, “you should use take-home tests or projects that the candidate can explain back to you”.

Ah, but you see, not everyone can afford to let their evenings and weekends disappear into these take-home tests or side projects.

Due to this inconveniently linear construct called “time”, more experienced engineers start having or caring for families, adopting pets, or (lord forbid) doing sports and hobbies. It’s also no big secret that the majority of housework and care duties still tend to be shouldered by women.

To put your best foot forward as a candidate, you will create the best possible solution, no matter how many hiring managers tell you to “only spend 2–3 hours on a solution”. Therefore, take-home or “own project” technical interviews tend to put older candidates and women candidates at a disadvantage.

One other problem popping up nowadays is the prevalence of AI tools for engineers to pass technical interviews, ranging from manually entering prompts into ChatGPT (now a bit old-school), all the way through to having someone else take the technical round for you and have your face artificially plastered onto theirs.

Going back to take-home tests for a moment, these are all but obsolete in the age of AI, in large part because all the solutions seem to be eerily similar to each other… 👀

So what do you do when…

Leetcode tests aren’t a good indication of the work itself.

Take-homes will favour those with more free time.

Asking freestyle technical questions lends itself to cheating with AI.

Here, I share our own journey at Berlin mental health DTx startup HelloBetter in transforming our technical interview round into a solution that not only mirrors the real work, but also doesn’t require an unreasonable amount of candidate time.

I don’t believe there is a perfect solution yet to the challenge of assessing technical skill in an engineering candidate. However, I do think this is the best solution I know of so far in terms of balancing realistic expectations, useful insights, and equitable testing conditions.

Background

In November 2024, we began overseeing changes to our hiring process by first defining what our current issues were.

Most of these will probably seem familiar to you:

An interview process with too many steps.

Long time-to-hire.

Not discovering crucial information about a candidate until late in the process (e.g. they want to move vertically or horizontally within a year).

Candidates using AI to cheat or misrepresent their abilities.

A technical interview round that feels more like a memory test.

Engineers’ time being taken up by interviewing candidates the hiring manager doesn’t want.

Engineers who are confused when they recommend against a candidate in an interview round, but that candidate gets hired anyway, or vice versa.

Non-Technical Hiring Changes

Firstly, let’s look at the solutions we implemented that aren’t related to the technical interview:

Introduced a short questionnaire in the application form to screen out some common misalignments on goals, salary, etc. (Though it should be noted that all HelloBetter IC salary bands are publicly available)

Moved the technical round after the hiring manager round.

Combined our two final, half-hour interview rounds — the “Team Interview” and the “Founder Interview” — into one hour-long slot, to reduce delays from scheduling.

I made more of my own time available, for example by interviewing multiple candidates per day or back-to-back.

For our most recent hire, I made a point to stay in close contact with our recruiter, sharing at least 1–2 Slack messages every day to stay on top of the process, candidates, and next steps. We further had a half-hour sync meeting every week.

Most of the changes above were made in collaboration between the Engineering Managers and Recruitment. I volunteered to take on the challenge of defining changes to the technical interview, in large part because I had a clear vision of how it could be done better.

Technical Interview Round: Workshop Phase

I wanted to ensure this was something involving all of engineering. Since the engineers are the ones conducting the technical interview round, it made sense to have them drive the ideation and narrowing of the precise structure, based on my criteria.

In Slack, I asked for volunteers to assist me, and got 6 takers. By chance, they managed to neatly divide into two engineers each for mobile, web frontend, and backend.

The engineers in question were Julia Ero, Mohamed Gaber Elmoazin, Andrei Lapin, Caio Quinta, Euclides dos Reis Silva Junior, and Chaythanya Sivakumar.

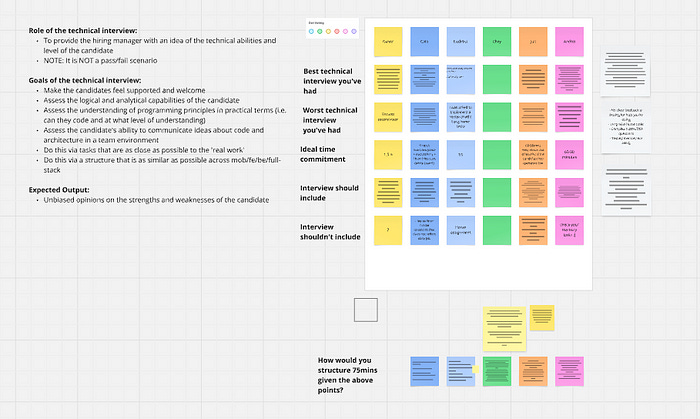

I set up a Miro board and defined the goals/guidelines for what we wanted the technical round to achieve. In two sessions of about 1 hour each, I guided the engineers through questions and discussions about their own experiences both as candidates and as interviewers.

As you can see in the image, I provided a few guiding principles:

The goal of the interview is to provide the hiring manager with an idea of the technical abilities and level of the candidate, not to pass or fail that candidate.

Create an interview structure that can work across disciplines (i.e. backend and frontend interviews shouldn’t be wildly different).

The candidate should feel supported and welcome.

The process should be as close as possible to the actual work they will do, rather than on purely abstract concepts.

The end result should allow us to assess the candidate’s analytical capabilities, programming ability, understanding of programming principles, and “code communication” skills.

In the first session, we covered the engineers’ own experiences. The goal here was to ground their ideas in lived experience and to ensure that their framing began from the candidates’ perspective.

Each person was given their own post-it to fill out, and after each section, I would summarise what points seemed to be most common or agreed-upon.

From there, we devised the basic structure with a timeline of approximately 75–90 minutes total.

2. Introducing the McDougall Method

In this 90-minute interview structure, we assess the abilities of the candidate in a real-world scenario, balancing a fair process for the candidate with the pragmatic reality of a technical assessment.

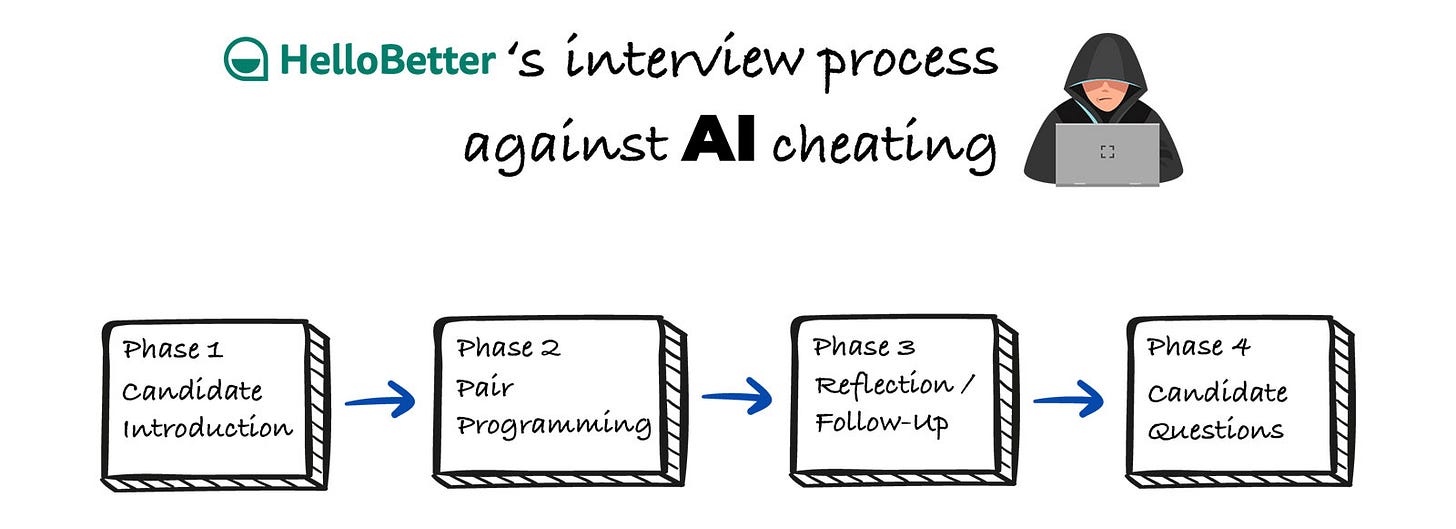

The structure can be broken down into four phases:

Introduction to the candidate and a brief overview of their technical history (10–15 minutes)

Pair programming session (30–45 minutes)

Reflection/follow-up questions (15 minutes)

Candidate questions and wrap-up (10 minutes)

Separating out each of these phases, we brainstormed together which questions we could ask in each one and, for Phase 2 (Pair Programming), how we could best achieve the goals laid out above.

Each of these phases is important and serves a crucial purpose. Let’s look at each in turn.

A quick note on naming…

The term “McDougall Method” is my own label for the framework I led, developed and documented to address these technical interview challenges. I chose the name because it’s more convenient than saying “our new technical interview method” or “HelloBetter’s updated technical interview round structure” on repeat.

However, like most things EMs take credit for, it was not actually a solo effort. It exists thanks to the input of the six engineers mentioned above, as well as HelloBetter CTO Amit Gupta and fellow EMs Leonardo Couto and Garance Vallat, who provided me with the freedom to drive this initiative.

Phase 1: Candidate Introduction and Technical Background

This phase is pretty common, but nevertheless, it’s important to understand why this phase exists in most technical interviews, rather than jumping straight into a coding exercise or a quiz. This stage only includes two engineers, to avoid the candidate being overwhelmed but also to avoid any potential individual bias playing too big of a role.

In short, we want the candidates to feel as relaxed as possible. We will never have fully relaxed candidates in this situation, but if there’s any way for us to reduce stress, we know that will lead to better performance. The brain does not operate at its best when it’s in fight-or-flight mode.

Here, we smile, introduce ourselves, make a bit of small talk about our roles at the company and maybe even a fun fact about ourselves.

We then give the candidate an opportunity to introduce themselves with the same basic questions they face in most interview stages, which should provide some ‘easy wins’ for them:

Tell us about yourself

Tell us about your recent work history

What excites you about the role?

…and so on.

We explicitly don’t want this stage to go on too long, again because of stress.

Every candidate enters the technical round knowing they will be assessed, so too much small talk only delays the part they fear the most.

As such, we want enough introductory conversation to ‘grease the wheel’, but not so much that they start getting jittery about when the hard part will actually start. We set this limit at about 10–15 minutes.