How to Use AI to Improve Teamwork in Engineering Teams

Great teams, not individuals, build great software. Here's how we can use AI to improve teamwork!

This week’s newsletter is sponsored by DX.

If you’re looking to measure the impact of AI tools → the DX AI Measurement Framework includes AI-specific metrics to enable organizations to track AI adoption, measure impact, and make smarter investments.

When combined with the DX Core 4, which measures overall engineering productivity, this framework provides leaders with deep insight into how AI is providing value to their developers and the impact AI is having on organizational performance.

Thanks to DX for sponsoring this newsletter! Now, let’s get back to this week’s thought.

Intro

I’ve seen many projects fail not because of bad tech, but because of poor communication and teamwork.

Conway’s law is very real:

Organizations that design systems are constrained to produce designs that are copies of the communication structures of these organizations.

Yet many organizations focus on improving individual performance instead of teamwork, especially when it comes to AI.

I believe the reason is simple: there aren't many resources on how to leverage AI to improve teamwork.

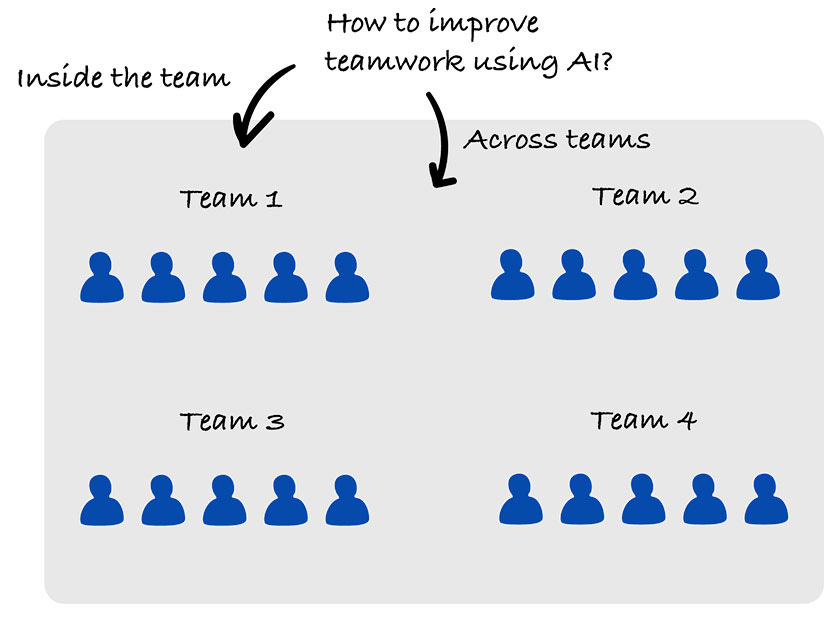

So, today’s article sets out to answer one main question:

How can we leverage AI to improve teamwork, especially in engineering organizations and teams?

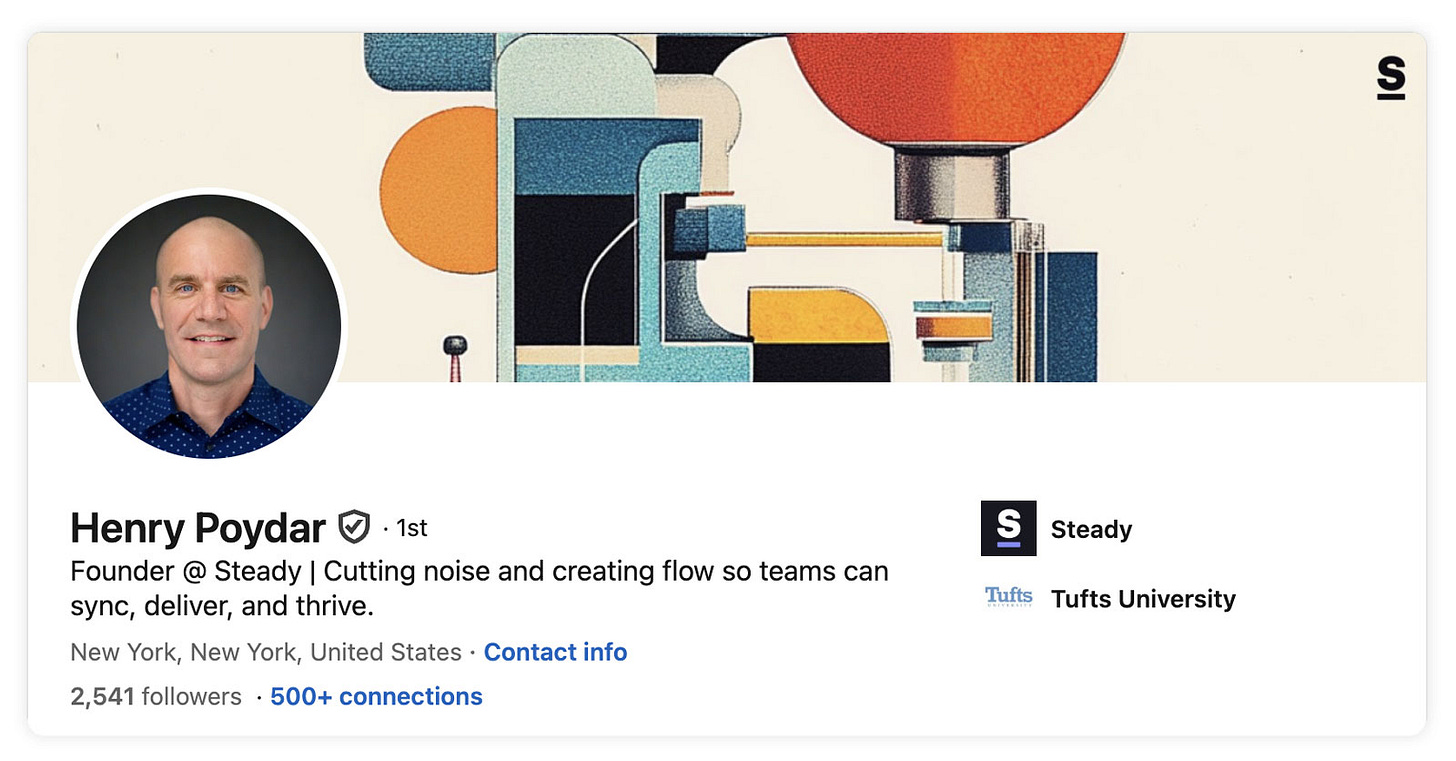

To help us with this, we have Henry Poydar with us today, who has been working on this exact topic at his company, Steady, for close to 2 years now!

P.S. We met Henry at the University of Maryland earlier this year, where we talked about how to improve coordination in engineering teams.

You can read all about the event and what we talked about here:

Introducing Henry Poydar

Henry Poydar is the Founder and CEO of Steady, with nearly 25 years of experience in software engineering and in leading product and engineering teams.

For nearly 2 years, he has been working closely on how to use AI to improve teamwork within and across teams. Today, he’s kindly sharing his insights with us!

Everyone’s talking about AI for tackling individual workflows. But what about teamwork?

GenAI has changed how we write code. But it hasn’t yet changed how we work together as engineers.

What if we could take the benefits of AI code assistants (assembling context, surfacing decision points, and removing boilerplate) and apply them to teamwork itself?

In this article, I’ll offer an approach to do just that. But first, let’s ground ourselves in a few first principles.

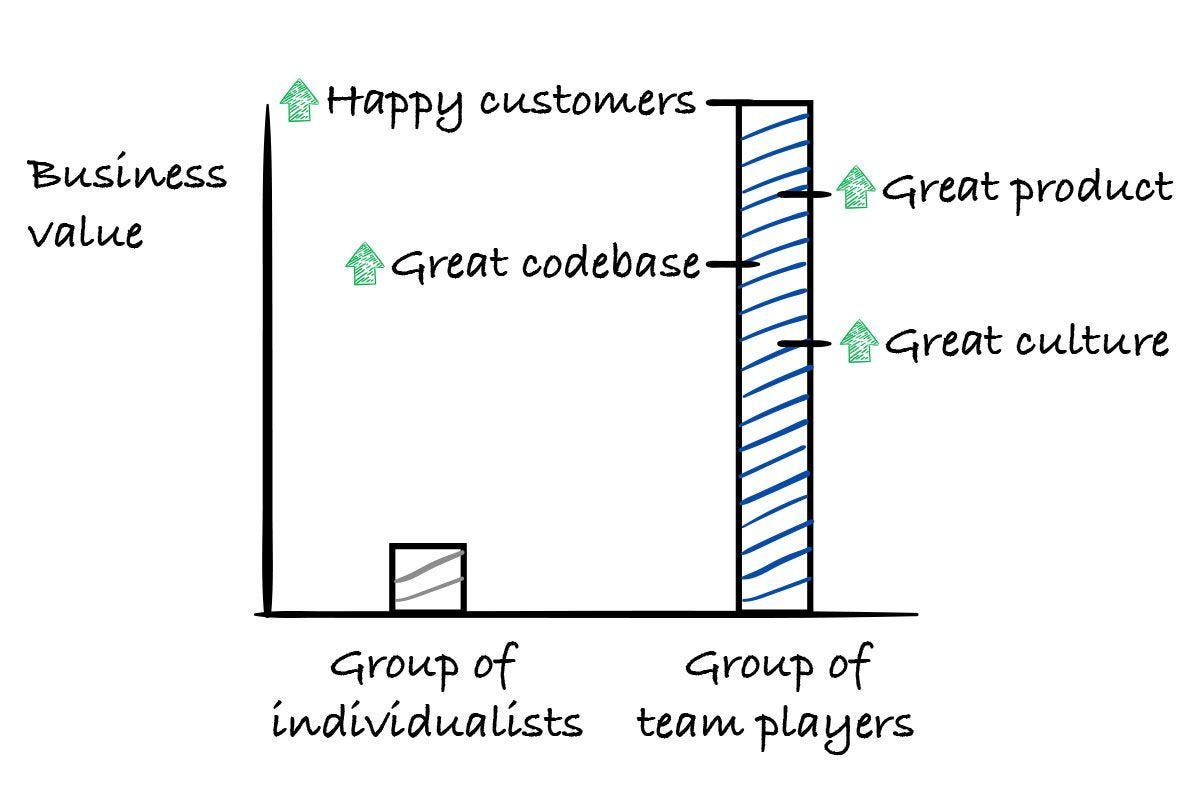

There is no greater technology than a team of humans

We are fundamentally social creatures. Humans literally cannot survive alone; we die without connection, collaboration, and shared purpose. These are biological facts, not philosophical conjectures.

Throughout history, our greatest achievements have come not from individual genius, but from coordinated human effort.

The Apollo program wasn't just a group of brilliant engineers; it was about 400,000 people working in sync toward a shared vision.

Linux didn't emerge from Linus Torvalds alone, but from thousands of contributors building on each other's work across decades.

Even today's AI revolution proves this point. OpenAI and Meta aren't offering enormous salaries to lure the best minds into working on their own; they pay them to join a team.

And the most valuable AI startups aren't built around individual contributors working in isolation, but around tight-knit groups who can move fast, think together, and coordinate complex technical decisions at speed.

The ancient Greeks had a word for this: synergia.

It's the idea that the combined effect of a group working together exceeds the sum of its members' individual efforts. Modern research backs this up: diverse teams consistently outperform even the most talented individuals when tackling complex problems.

The three ingredients of effective teamwork

So what makes a team truly effective?

Across my 25 years working with high-performing engineering teams, and in data and insights from modern management science such as Google's Project Aristotle, three key ingredients show up again and again:

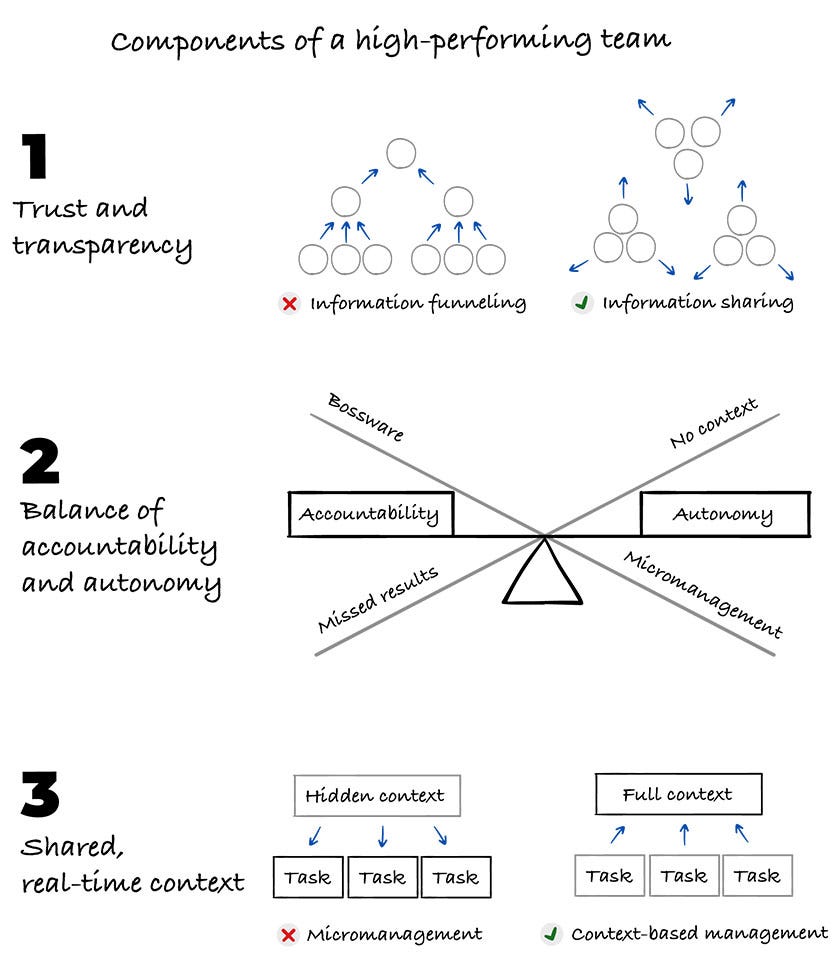

Trust and transparency: confidence in each other through clear communication

A balance of accountability and autonomy: zero micromanagement required

Real-time context: who’s working on what, where, how, and, most importantly, why

You need all three.

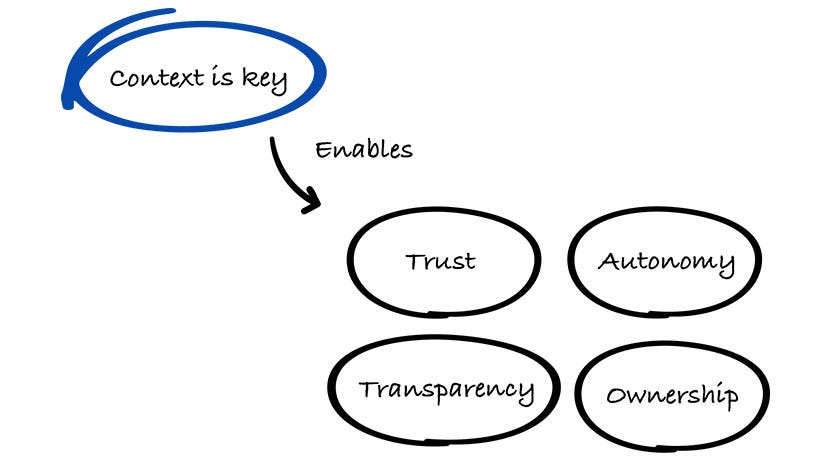

But in today's fragmented work environment, the secret sauce is the third one: context → it’s what makes the first two possible.

Picture your last nightmare project:

Engineers building features that don't fit together, product managers chasing status updates, everyone working hard but nothing clicking.

That's what happens when context is absent and people have to guess at it. Trust erodes as people make poor decisions based on incomplete information. Accountability devolves into micromanagement because managers can't tell whether work is on track.

And autonomy drifts into chaos as teams build the wrong things, despite the best intentions. Worst of all, everyone is unhappy, because the shared purpose is unclear.

Now picture your last breakthrough project:

That magical team flow state where the backend engineer anticipates frontend needs, the QA lead writes tests before seeing tickets, and someone catches a critical but obscure bug in code review.

Everyone moves like they're reading each other's minds because they're all working from the same rich understanding of what's happening and why it matters.

That's context in action.

When teams have real-time visibility into who's doing what and why, transparency becomes natural, trust builds organically, and people can own their outcomes without constant oversight.

And crucially: the right information, at the right time, in the right hands doesn't just make teams more efficient → it makes them happier.

A shared brain for teamwork

If context is the foundation for trust, autonomy, and accountability, the next two questions are:

How do we deliver it at scale?

And if we're going to leverage genAI, what's the foundational blueprint for doing so?

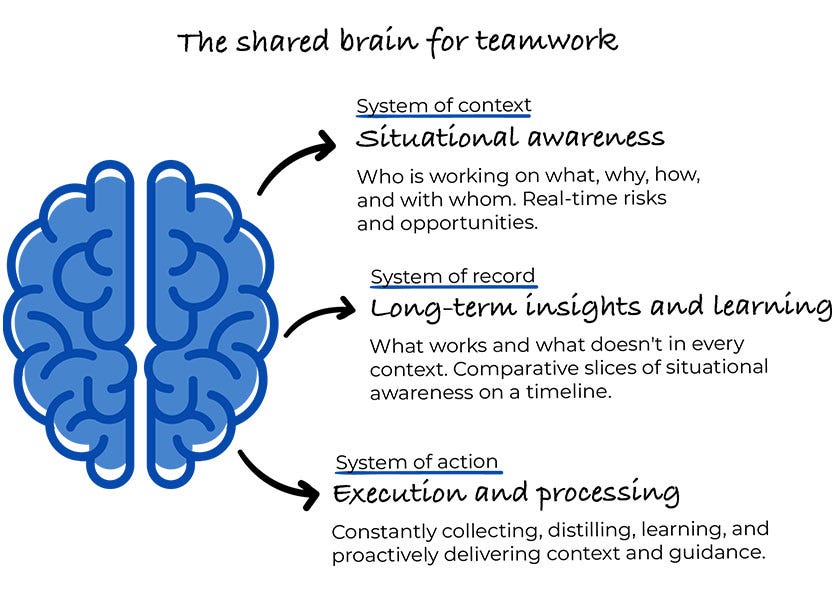

The answer isn’t yet another set of dashboards or KPIs. It’s something fundamentally different: a shared brain for teamwork.

Every high-performing team eventually develops one → a collective sense of what’s happening, why it matters, and how the pieces fit together.

But it usually breaks down at scale. What works for a 5-person team doesn’t translate across teams of teams, time zones, and functions. Context gets siloed, decisions get lost, and alignment turns into overhead.

What if you could build that shared understanding systematically and scale context across the entire org?

A true shared brain operates on three levels:

System of context → Situational awareness: who’s working on what, why, how, and with whom. Real-time risks and opportunities.

System of record → Institutional memory: what decisions were made, why they were made, and what we learned. Slices of context captured over time that we can mine for insights.

System of action → Intelligent execution: constantly collecting, distilling, and proactively delivering relevant context to the right people, at the right time.

Together, these systems form a coordination intelligence layer that strengthens human judgment instead of replacing it.

The shared brain doesn’t make decisions for you; it keeps everyone aligned on what’s happening, why it matters, and where things are going.
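To make the three levels a little more concrete, here is a minimal Python sketch of how they might fit together. Everything in it is hypothetical and for illustration only: the class names (`WorkItem`, `Decision`, `SharedBrain`), their fields, and the `digest_for` method are assumptions, not Steady's implementation or any real product API. The "system of context" is a list of current work items, the "system of record" is an append-only decision log, and the "system of action" is a function that assembles the context relevant to one person. Note that it only surfaces information; it never assigns work or makes a call.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class WorkItem:
    """Hypothetical unit of situational awareness: who is doing what, why, and with whom."""
    owner: str
    task: str
    why: str
    collaborators: list[str] = field(default_factory=list)
    at_risk: bool = False


@dataclass
class Decision:
    """Hypothetical unit of institutional memory: what was decided and why."""
    made_on: date
    summary: str
    rationale: str
    related_people: list[str] = field(default_factory=list)


@dataclass
class SharedBrain:
    context: list[WorkItem] = field(default_factory=list)  # system of context
    record: list[Decision] = field(default_factory=list)   # system of record

    def log_decision(self, decision: Decision) -> None:
        # Append-only, so past decisions (and their rationale) stay minable later.
        self.record.append(decision)

    def digest_for(self, person: str) -> list[str]:
        """System of action: assemble the context relevant to one person.

        It surfaces work items and decisions; it does not assign tasks or decide anything.
        """
        lines = []
        for item in self.context:
            if person == item.owner or person in item.collaborators:
                flag = " [AT RISK]" if item.at_risk else ""
                lines.append(f"{item.owner}: {item.task} (why: {item.why}){flag}")
        for d in self.record:
            if person in d.related_people:
                lines.append(f"Decision {d.made_on.isoformat()}: {d.summary} (why: {d.rationale})")
        return lines


# Usage with made-up example data.
brain = SharedBrain()
brain.context.append(WorkItem("ana", "Migrate auth service", "unblock SSO launch", ["ben"], at_risk=True))
brain.context.append(WorkItem("cy", "Refresh onboarding docs", "reduce ramp-up time"))
brain.log_decision(Decision(date(2025, 3, 4), "Adopt OAuth 2.1", "simplify token handling", ["ana", "ben"]))

for line in brain.digest_for("ben"):
    print(line)
```

Here `ben` sees the at-risk migration he collaborates on and the decision that affects him, while `cy`'s unrelated work stays out of his digest. Whether to act on the risk flag is left entirely to the humans involved.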

Not so fast: humans are the loop

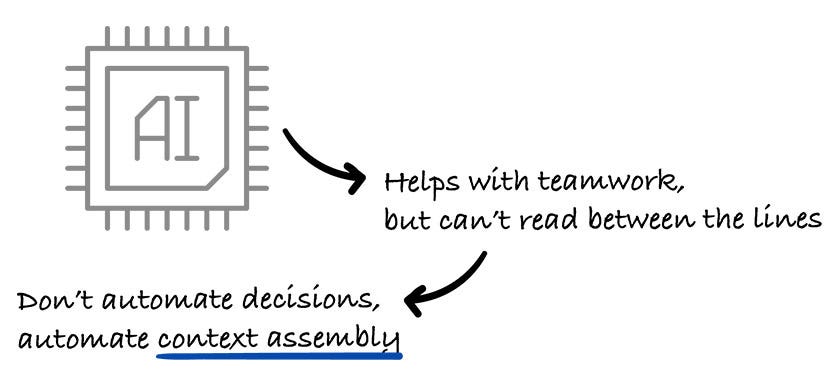

Before we look at ways to implement a shared brain, it’s tempting to imagine a future where AI simply runs the team: flagging blockers, assigning tasks, keeping everything humming.

But that fantasy misses something essential:

Teamwork and coordination aren’t algorithmic problems. They’re human ones.

AI can help. It can assemble context, surface anomalies, and suggest next steps (see below). But it can’t read between the lines.

It doesn’t know that a late PR reflects burnout, not laziness.

It can’t sense when alignment is performative.

It doesn’t feel the stakes in a strategic tradeoff. Only humans do.

So we don’t automate decisions. We automate context assembly.

In other words, a shared brain for teamwork should make it easier for humans to make good calls, not take the calls out of our hands.

And importantly, it should introduce just the right amount of friction.

Not the drag of endless meetings or noisy dashboards, but deliberate moments of reflection and clarity. Writing. Thinking. Enough to prompt good questions, sharpen assumptions, and keep everyone aligned on reality.