Top AI Coding Tools for Engineering Teams in 2025

An opinionated guide on which AI coding tools to use and the risks associated with them!

Intro

There are many different options for using AI, both personally and professionally. It feels like every single week, a new tool is available, an LLM update is released, or something new shifts how we use AI.

Choosing which tool to go for can feel daunting. To help with this, I am happy to bring in Jeff Morhous as a guest author for today’s newsletter article.

Jeff is a Senior Software Engineer and a big AI enthusiast. He also writes a newsletter called The Augmented Engineer, where he regularly shares his insights on different AI-related topics.

Today, he is sharing his overview of the top AI coding tools he recommends for engineering teams, his opinion of them, the associated risks and how they can potentially be mitigated.

Let’s get straight into it!

Jeff, over to you.

AI tools are no longer (just) toys. They're power tools

Used well, they can help your team ship faster. Used poorly (or not at all), they become a liability.

Rolling out AI at work isn’t as simple as telling your developers to “go use ChatGPT.” There are privacy implications, licensing questions, and a rapidly shifting landscape of tools that do everything from autocomplete to autonomous refactoring. Some tools are great for individuals, while others are built for teams.

This guide walks through the major AI tools your engineers are likely to reach for and shows you some of the enterprise-friendly options you can choose from, along with the risks associated with them. Let’s get into it!

So what AI tools are worth using?

If you aren’t already using some AI tools in your day-to-day work, you probably have at least heard some names. Of course, everyone knows ChatGPT, and many are familiar with Copilot, but there are plenty more to get into. Let’s take a look at each one.

ChatGPT

ChatGPT is easily the world’s most popular AI product.

I like ChatGPT because of how great a product it is, even if the underlying model isn’t always the best choice. With a Plus subscription, you have access to GPT-4o, GPT-4.5, and more models that are on the bleeding edge.

You also get Deep Research, which in my opinion is the single most useful AI feature on the planet. If you don’t have time to wait for Deep Research, the Search function is still quite useful.

Combine this with near-infinite chat history, long context windows, and custom instructions, and you have a recipe for an incredibly useful product. Using “projects” to organize chats, files, and instructions is another great way to get extra use out of the tool.

ChatGPT for work

The free version of ChatGPT gives you access to some basic models. It’s fast, decent for casual use, and works fine for things like writing boilerplate, summarizing documents, or basic coding help. But it’s also missing a lot:

No access to GPT-4 (or GPT-4o)

No file uploads, vision, or advanced tools

No data privacy guarantees

ChatGPT Plus gives you access to GPT-4o and other top-tier models, including the latest reasoning models. It also has much higher usage limits for Deep Research. For $20/month per user, you unlock:

Better models

Tools like Deep Research

Custom instructions and longer context windows

Projects to organize chats, files, and settings per use case

It’s a huge step up from the free tier, and it’s what I use for personal stuff. It is still a consumer product, though. You can opt out of having your chats used for training, but there are few other guarantees or guardrails.

ChatGPT Team is for small companies or departments that want more control. It includes everything in Plus and then some:

A shared workspace for managing usage and billing

Data privacy

Admin controls and some usage analytics

Higher usage limits

This tier starts at $25/user/month, and it’s a great middle ground if you’re not ready for Enterprise but want something with better data privacy than Plus.

Meanwhile, ChatGPT Enterprise is the full-blown “AI at work” option. You get:

Even longer context windows (128k+ tokens)

Full SOC 2 compliance, SSO, and audit logging

Dedicated support and deployment help

SLA-backed uptime and security guarantees

The big draw for businesses here is data privacy and compliance. Nothing your team sends into ChatGPT Enterprise leaves the walled garden. It’s isolated, encrypted, and never used for training.

If you haven’t been able to get approval for your team to expense individual subscriptions, this is probably the next stop.

Claude

Claude is the other big player on the AI-chat block. It’s a web-based AI tool that has a familiar chat interface but is backed by some truly bleeding-edge models.

Anthropic has done an incredible job with the Claude models, but I’m a little less impressed with the product. It’s hard to explain exactly why, but I (and plenty of others) have a preference for ChatGPT. Still, Claude, specifically 3.7 Sonnet, has consistently performed better at coding than most of OpenAI’s models.

I can’t say whether ChatGPT or Claude is objectively better. I’d bet you’ll find your team mostly split.

Claude for work

Claude Pro costs $20/month and gives you more usage, more models, and more features (like projects!). There’s also a $100/month tier with higher caps.

Still, this is a consumer-grade product. Like ChatGPT Plus, it’s powerful, but not likely a great fit for enterprise work unless you're willing to accept some risk.

Claude Team is Anthropic’s business-tier product for smaller companies. This is the tier most companies should look at if they’re using Claude seriously. The high token limit is especially valuable for working with codebases, logs, or long-form technical documents.

Claude Enterprise offers similar data protections and features to ChatGPT Enterprise, and is a better fit for bigger companies or those subject to heavy data regulations.

Copilot

GitHub Copilot pioneered the AI-in-the-editor tool category. When Copilot came out, I was genuinely shocked.

As a simple extension for VS Code (or your editor of choice), Copilot started as just fancy autocomplete, but it has evolved with more features such as Chat, Copilot Edits, and Agent, all of which help you get more work done in less time.

Though Copilot was the first to the space, competitors such as Cursor and Windsurf have mostly eaten its lunch. Most developers have a preference for one of those tools over Copilot.

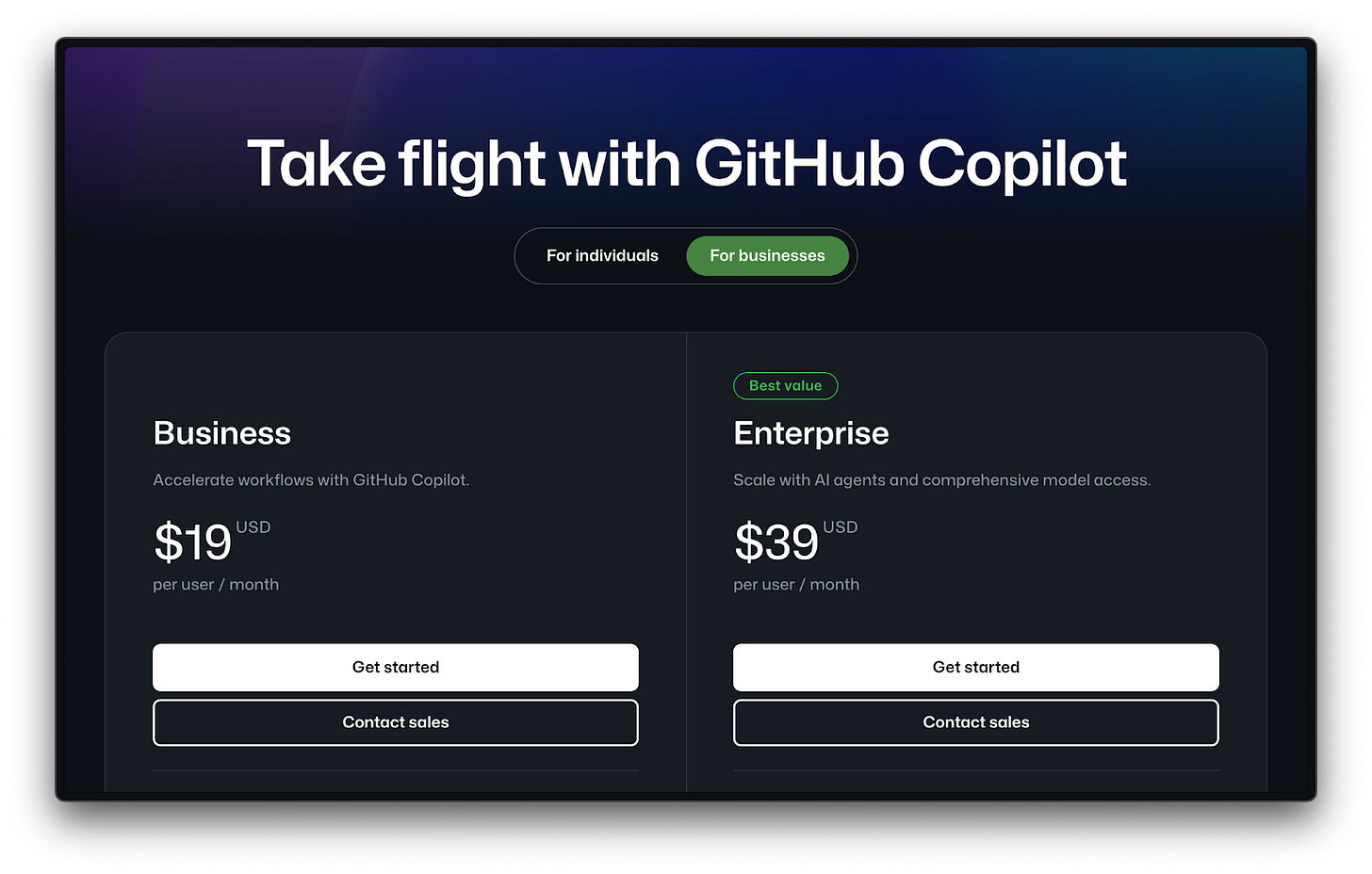

Copilot for work

Copilot Business is $19/user/month and is aimed at companies that want to roll it out at scale but have control over usage and data. It includes:

Unlimited agent mode and chats with GPT-4o

Unlimited code completions

Access to code review, Claude 3.5/3.7 Sonnet, o1, and more

300 premium requests to use the latest models per user, with the option to buy more

User management and usage metrics

IP indemnity and data privacy

This plan addresses the biggest concern most companies have: sending proprietary code to Microsoft. With Copilot Business, code stays private, and Microsoft guarantees it won't be used to improve the model.

Copilot also has an Enterprise tier that comes with even higher limits.

It’s worth saying that I’ve used Copilot extensively and found it much less useful than Cursor or Windsurf, even with the recent rollout of agent mode.
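If you want to put the per-seat prices quoted so far side by side, a few lines of Python are enough. This is only a rough sketch: the prices are the ones mentioned in this guide and may have changed, the function names are made up for illustration, and the team size of 12 is a hypothetical example. Team and Enterprise pricing often depends on billing terms, so treat the output as a starting point for a budget conversation rather than a quote.

```python
# Per-seat monthly list prices in USD, as quoted in this guide.
# These are assumptions for illustration and may be out of date.
PRICE_PER_SEAT = {
    "ChatGPT Plus": 20,
    "ChatGPT Team": 25,      # described above as a "starts at" price
    "Claude Pro": 20,
    "Copilot Business": 19,
}


def monthly_cost(plan: str, seats: int) -> int:
    """Estimated monthly cost in USD for `seats` users on `plan`."""
    if seats < 0:
        raise ValueError("seats must be non-negative")
    return PRICE_PER_SEAT[plan] * seats


def annual_cost(plan: str, seats: int) -> int:
    """Estimated yearly cost in USD, assuming 12 months at the same price."""
    return monthly_cost(plan, seats) * 12


if __name__ == "__main__":
    team_size = 12  # hypothetical team size
    for plan in PRICE_PER_SEAT:
        print(
            f"{plan}: ${monthly_cost(plan, team_size)}/month, "
            f"${annual_cost(plan, team_size)}/year"
        )
```

Swap in your own headcount and current prices before sharing the numbers with whoever approves the spend.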