Biggest Mistakes Engineering Leaders Make With AI

🎁 Notion Template: AI Communication Cheat Sheet for Engineering Leaders included!

Intro

Engineering leaders face many challenges when it comes to AI, especially because of the unrealistic expectations coming from stakeholders, company leaders, and well-known individuals via articles and videos.

This has proven to be one of the main reasons engineering leaders believe that AI is impacting the industry negatively.

It’s really important for engineering leaders to manage these expectations, make sure AI is adopted deliberately, and avoid irrational decisions.

To help us with this, Hamel Husain, an ML Engineer with over 20 years of experience helping companies with AI, is sharing the 4 biggest mistakes he sees engineering leaders make with AI.

This is an article for paid subscribers, and here is the full index:

- Navigating the AI space can be overwhelming

- Mistake #1: Taking Advice From People Who Rarely Work With AI

- “Perfect” is Flawed

- When You’re Not Hands-On With AI, It’s Hard to Separate Hype From Reality

- Mistake #2: Focusing on Tools over Processes

- Improvement Requires Process

- Rechat’s Success Story

- This Is What You Should Be Asking Your Team

- 🔒 Mistake #3: Using Buzzwords and Jargon when it comes to AI

- 🔒 Why Simple Language Matters in AI

- 🔒 Examples of Common Terms Translated

- 🔒 🎁 Notion Template: AI Communication Cheat Sheet for Engineering Leaders

- 🔒 Strategies for Promoting Simple Language in Your Organization

- 🔒 Mistake #4: Incorrectly Hiring an AI Team

- 🔒 The AI Specialist Trap

- 🔒 A Smarter Hiring Progression

- 🔒 Hiring the Right Way

- 🔒 Last words

Introducing Hamel Husain

Hamel Husain is an experienced ML Engineer who has worked for companies such as GitHub, Airbnb, and Accenture, to name a few. Currently, he’s helping many people and companies to build high-quality AI products as an independent consultant.

Together with Shreya Shankar, he teaches a popular course called AI Evals For Engineers & PMs. I highly recommend this course if you want to build quality AI products. I’ve personally learned a lot from it.

Check out the course and use my code GREGOR35 for $1015 off. The cohort starts on July 21.

Navigating the AI Space Can Be Overwhelming

There’s a significant disconnect between those building AI products and people who are making decisions about them.

There’s a sea of advice on how to create effective AI products, but much of it comes from sources that aren’t hands-on with the technology.

Without practical experience, it’s challenging to separate fact from fiction and make informed decisions.

On the flip side, AI practitioners who are in the trenches often struggle to communicate their knowledge in a way that resonates with executives.

They tend to think and speak in technical terms, which can be off-putting or confusing for those without a deep technical background.

In this article, we are going to go through the biggest mistakes engineering leaders make when it comes to AI. Let’s start with the first one.

Mistake #1: Taking Advice From People Who Rarely Work With AI

We are all bombarded with articles and advice on building AI products.

The problem is, a lot of this “advice” comes from executives who rarely interact with the practitioners actually working with AI. This disconnect leads to misunderstandings, misconceptions, and wasted resources.

An example of this disconnect in action comes from an interview with Jake Heller, CEO of Casetext.

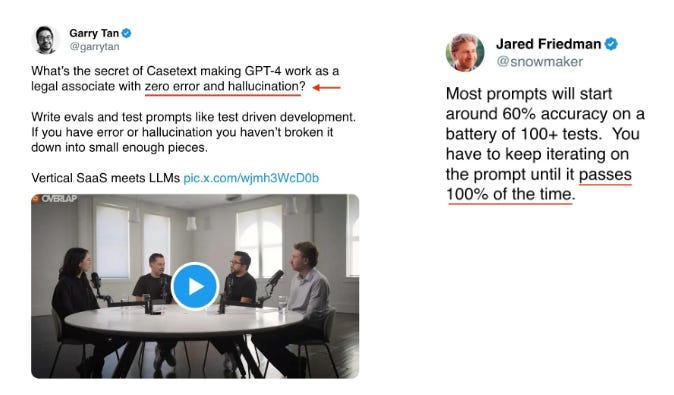

During the interview (you can find the clip here), Jake made a statement about AI testing that was widely shared:

“One of the things we learned, is that after it passes 100 tests, the odds that it will pass a random distribution of 100k user inputs with 100% accuracy is very high.”

This claim was then amplified by influential figures like Jared Friedman and Garry Tan of Y Combinator, reaching countless founders and executives.

The morning after this advice was shared, I received numerous emails from founders asking if they should aim for 100% test pass rates.

If you’re not hands-on with AI, this advice might sound reasonable. But any practitioner would know it’s deeply flawed.
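A rough back-of-the-envelope check shows why. The sketch below assumes every input fails independently with the same fixed probability. That is a simplification introduced here for illustration, not something stated in the interview, and the 1-in-1,000 failure rate is a hypothetical number.

```python
def prob_all_pass(failure_rate: float, n_inputs: int) -> float:
    """Probability that n independent inputs all pass at a fixed per-input failure rate."""
    return (1.0 - failure_rate) ** n_inputs


def max_failure_rate_consistent_with(n_passed: int, confidence: float = 0.95) -> float:
    """Largest failure rate not ruled out, at the given confidence, by n_passed clean passes."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_passed)


# Hypothetical system that fails on 1 in 1,000 inputs (illustrative assumption).
failure_rate = 0.001

print(f"P(pass all 100 tests)   = {prob_all_pass(failure_rate, 100):.3f}")
print(f"P(pass all 100k inputs) = {prob_all_pass(failure_rate, 100_000):.3e}")
print(f"Failure rate still plausible after 100 clean passes: "
      f"{max_failure_rate_consistent_with(100):.1%}")
```

With these illustrative numbers, the system passes a 100-test suite about 90% of the time, yet the chance of a flawless run over 100k inputs is effectively zero. Put differently, 100 clean passes alone cannot rule out a failure rate of roughly 3%.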

“Perfect” is Flawed

In AI, a perfect score is a red flag.

It often means the model has inadvertently been trained on data or prompts that are too similar to the tests. Like a student who is given the answers before an exam, the model will look good on paper but is unlikely to perform well in the real world.

If you are sure your data is clean but you’re still getting 100% accuracy, chances are your tests are too weak or not measuring what matters.

Tests that always pass don’t help you improve; they’re just giving you a false sense of security.

Most importantly, when your models have perfect scores, you lose the ability to differentiate between them. You won’t be able to identify why one model is better than another, or strategize how to make further improvements.

The goal of evaluations isn’t to pat yourself on the back for a perfect score.

It’s to uncover areas for improvement and ensure your AI is truly solving the problems it’s meant to address. By focusing on real-world performance and continuous improvement, you’ll be much better positioned to create AI that delivers genuine value.

When You’re Not Hands-On With AI, It’s Hard to Separate Hype From Reality

Make sure to keep this in mind:

- Be skeptical of advice or metrics that sound too good to be true.

- Focus on real-world performance and continuous improvement.

- Seek advice from experienced AI practitioners who can communicate effectively with executives.

Now, let’s take a look at what is, in my opinion, the biggest mistake engineering leaders make when investing in AI.

Mistake #2: Focusing on Tools over Processes

One of the first questions I ask engineering leaders is how they plan to improve AI reliability, performance, or user satisfaction.

If the answer is “We just bought XYZ tool for that, so we’re good,” I know they’re headed for trouble.

Focusing on tools over processes is a red flag, and the biggest mistake I see engineering leaders make when it comes to AI.

Improvement Requires Process

Assuming that buying a tool will solve your AI problems is like joining a gym but not actually going.

You’re not going to see improvement by just throwing money at the problem. Tools are only the first step. The real work comes after.

For example, the metrics that come built into many tools rarely correlate with what you actually care about. Instead, you need to design metrics that are specific to your business, along with tests to evaluate your AI’s performance.

The data you get from these tests should also be reviewed regularly to make sure you’re on track. No matter what area of AI you’re working on (model evaluation, RAG, or prompting strategies), the process is what matters most.

Of course, there’s more to making improvements than just relying on tools and metrics. You also need to develop and follow processes.

Rechat’s Success Story

Rechat is a great example of how focusing on processes can lead to real improvements.

The company decided to build an AI agent that helps real estate agents with a wide variety of tasks across their job. However, they were struggling with consistency.

When the agent worked, it was great, but when it didn’t, it was a disaster.

The team would make a change to address a failure mode in one place, but end up causing issues in other areas.

They were stuck in a cycle of whack-a-mole. They didn’t have visibility into their AI’s performance beyond “vibe checks” and their prompts were becoming increasingly unwieldy.

You can find the full case study here:

By focusing on the processes, Rechat was able to reduce its error rate by over 50% without investing in new tools!

This Is What You Should Be Asking Your Team

Instead of asking which tools you should invest in, you should be asking your team:

- What are our failure rates for different features or use cases?

- What categories of errors are we seeing?

- Does the AI have the proper context to help users? How is this being measured?

- What is the impact of recent changes to the AI?

The answers to each of these questions should involve appropriate metrics and a systematic process for measuring, reviewing, and improving them.
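As a minimal sketch of what answering the first two questions with data could look like, the snippet below computes failure rates per feature and counts error categories from a handful of manually reviewed interactions. The record fields (`feature`, `passed`, `error_category`) and the sample values are hypothetical, chosen only for illustration. They are not Rechat’s data or any specific tool’s schema.

```python
from collections import Counter, defaultdict

# Hypothetical reviewed interactions; in practice these would come from
# your own logs plus human review of each AI response.
reviews = [
    {"feature": "email_drafting", "passed": True, "error_category": None},
    {"feature": "email_drafting", "passed": False, "error_category": "wrong_tone"},
    {"feature": "listing_search", "passed": False, "error_category": "missing_context"},
    {"feature": "listing_search", "passed": False, "error_category": "missing_context"},
    {"feature": "listing_search", "passed": True, "error_category": None},
    {"feature": "scheduling", "passed": True, "error_category": None},
]


def failure_rates_by_feature(records):
    """Return {feature: share of reviewed interactions that failed}."""
    totals = defaultdict(int)
    failures = defaultdict(int)
    for record in records:
        totals[record["feature"]] += 1
        if not record["passed"]:
            failures[record["feature"]] += 1
    return {feature: failures[feature] / totals[feature] for feature in totals}


def error_category_counts(records):
    """Count how often each error category appears among failed interactions."""
    return Counter(r["error_category"] for r in records if not r["passed"])


rates = failure_rates_by_feature(reviews)
categories = error_category_counts(reviews)

# Worst features first, then the most common error categories.
for feature, rate in sorted(rates.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{feature}: {rate:.0%} failed")
print(categories.most_common())
```

Running the same report before and after a prompt or model change is one simple way to put numbers on the last question about the impact of recent changes.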

If your team struggles to answer these questions with data and metrics, you are in danger of going off the rails!