How to Measure AI Impact in Engineering Teams

This is how you should approach measuring AI impact, and these are the metrics to track!

Intro

“AI can improve Software Development productivity by 100x.” → This is one of the hype-creating statements that we hear a lot in our industry.

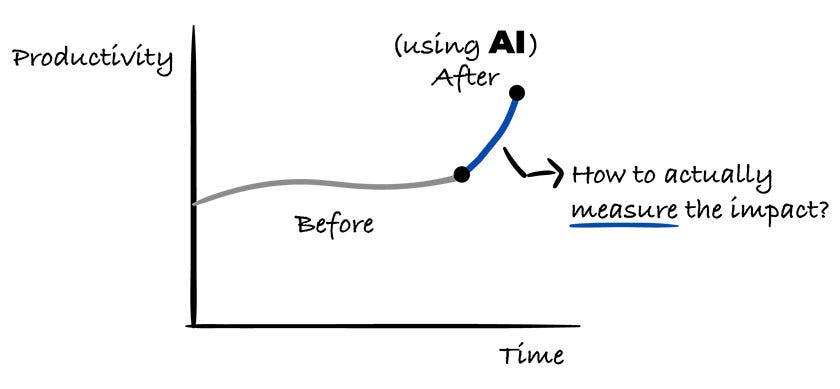

But nobody really knows what 100x means or how to measure it.

The people making these claims cite arbitrary numbers based on a “feeling,” with no data to back them up. This is why so many engineering leaders view AI negatively.

I’ve spoken to many different engineering leaders recently, and in most cases, the productivity increase they reported ranged from 0.3x to 1x.

But, how can we actually measure the productivity increase of AI tools?

Lucky for us, we have Laura Tacho with us today as a guest author. She’ll be sharing how we can approach measuring the impact of AI tools and which metrics to track.

P.S. This is our second collab with Laura. You can check out the first one here: Setting Effective Targets for Developer Productivity Metrics in the Age of Gen AI.

Introducing Laura Tacho

Laura Tacho is the CTO at DX, the developer intelligence platform designed by leading researchers. She is one of the people that I think highly of when it comes to measuring developer productivity.

Laura and DX CEO Abi Noda will be hosting a live discussion on July 17 to dive deeper into the AI Measurement Framework covered in today’s article.

They’ll walk through the key metrics for measuring the impact of AI code assistants and agents, and share data from DX on how organizations are adopting these tools and what impact they’re actually seeing.

AI tools are rapidly entering engineering workflows

From Copilot to Cursor, ChatGPT, and Claude, developers are increasingly turning to these tools for code generation, debugging, and implementation support. Adoption is no longer the question → the real challenge now is understanding impact.

Leaders are under pressure to justify investments, guide adoption, and stay competitive. But the metrics available today are often shallow or misused.

We’ve all seen the headlines: “AI is writing 50% of our code.” On the surface, those numbers are impressive. But under the hood, they often count suggestions accepted, not whether the code was modified, deleted, or ever made it to production.

For leaders trying to benchmark themselves against those claims, the reality is that these numbers are largely meaningless.
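To make the gap concrete, here is a minimal Python sketch of how the same team could report two very different numbers. Everything in it is an assumption for illustration: the `Suggestion` record, its field names, and the sample figures are hypothetical and do not come from any real tool or dataset.

```python
from dataclasses import dataclass


@dataclass
class Suggestion:
    """One AI suggestion (hypothetical record; field names are assumptions)."""
    lines_suggested: int
    accepted: bool
    lines_in_production: int  # suggested lines that shipped unmodified


def accepted_line_share(suggestions, total_lines_written):
    """Headline-style metric: accepted AI lines over all lines written."""
    if total_lines_written <= 0:
        raise ValueError("total_lines_written must be positive")
    accepted = sum(s.lines_suggested for s in suggestions if s.accepted)
    return accepted / total_lines_written


def production_line_share(suggestions, total_lines_in_production):
    """Stricter metric: AI lines that survived to production over all shipped lines."""
    if total_lines_in_production <= 0:
        raise ValueError("total_lines_in_production must be positive")
    surviving = sum(s.lines_in_production for s in suggestions if s.accepted)
    return surviving / total_lines_in_production


# Made-up sample data, purely illustrative.
suggestions = [
    Suggestion(lines_suggested=40, accepted=True, lines_in_production=10),
    Suggestion(lines_suggested=30, accepted=True, lines_in_production=0),
    Suggestion(lines_suggested=30, accepted=True, lines_in_production=20),
    Suggestion(lines_suggested=50, accepted=False, lines_in_production=0),
]

headline = accepted_line_share(suggestions, total_lines_written=200)
shipped = production_line_share(suggestions, total_lines_in_production=150)

print(f"Accepted-suggestion share: {headline:.0%}")
print(f"Share of shipped code:     {shipped:.0%}")
```

With this made-up data, the acceptance-based number is 50% while the share of shipped code is 20%. The exact definitions will differ from tool to tool, so treat this as a sketch of why the two numbers diverge, not as a standard formula.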

At the same time, teams on the ground are eager for direction. They want to know where to invest time, which workflows to prioritize, and how to level up their usage.

Without reliable data, teams are left flying blind and unsure whether AI tools are helping, hurting, or simply adding noise.

This disconnect is creating a measurement gap.

AI tooling is evolving fast, but our frameworks for understanding and managing its impact haven’t kept pace. That’s why organisations need a structured, research-backed approach for measuring AI adoption, not just for reporting purposes, but to guide better decisions.

The goal isn’t just to track usage but also to understand how AI is transforming work, where it’s creating leverage, and where it might be introducing risk. That starts with using the right metrics.