OpenAI's Report: The State of Enterprise AI

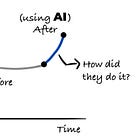

There has been a big increase in enterprise AI usage in the last 12 months!

This week’s newsletter is sponsored by Cerbos.

One size does not fit all: A guide to multitenant authorization

When an enterprise signs up for a SaaS product, the “Admin, Editor, Viewer” model breaks. Role explosion follows: thousands of tenant-specific role variants that cripple engineering velocity and stall enterprise deals.

Static roles weren’t designed for the complexity of today’s multitenant systems. Every enterprise has unique organizational structures, matrix reporting, and regional compliance requirements that demand contextual, granular permissions.

This ebook shows you how to implement dynamic, tenant-aware authorization that scales without role proliferation. Inside the ebook, you will find:

Why fixed roles break at enterprise scale (and what role explosion actually looks like)

How to implement authorization that mirrors each tenant’s organizational reality

Architecture patterns for separating platform-wide rules from tenant-specific policies

How to balance central control with tenant self-service and delegated administration

The PEP/PDP/PAP pattern and policy-as-code workflows

Real examples from leading SaaS companies scaling authorization across thousands of tenants

Thanks to Cerbos for sponsoring this newsletter. Let’s get back to this week’s thought!

Intro

Ever since AI tools took off in popularity, many people have had doubts about security, sharing sensitive data, and overall compliance when using such tools.

These doubts have been especially prevalent at enterprise companies, many of which chose to restrict such tools. But now we have the latest data on how enterprise companies are using AI tools, and one thing is clear:

A lot more enterprise companies are using AI tools these days.

On December 8th, 2025, OpenAI published its report on the state of enterprise AI, and I’ve taken a close look at it. Here is also the PDF of the report.

Some interesting insights:

Users report saving 40–60 minutes per day on average by using AI tools

Australia, Brazil, the Netherlands, France, and Canada are above the global average in the number of paying business customers of OpenAI (with Australia far ahead in first place)

ChatGPT message volume grew 8x and reasoning token consumption per organization increased approximately 320x year-over-year (from November 2024)

In today’s article, I am going over the report and sharing my thoughts on the data.

Let’s start!

OpenAI surveyed over 9,000 people from various fields in 100+ enterprise companies

They also shared results based on real-world usage data from enterprise customers.

Based on my research, OpenAI launched its ChatGPT Enterprise plan in late August 2023, so it has roughly 2.3 years of data.
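As a quick sanity check on the 2.3-year figure, here is a small Python sketch that computes the time elapsed between the Enterprise launch and the report. It assumes a launch date of August 28, 2023 (the text only says “late August 2023”) and uses the December 8th, 2025 publication date of the report; dividing by 365.25 is an approximation of the average year length, not an exact calendar calculation.

```python
from datetime import date

# Assumption: exact launch day; the article only says "late August 2023"
LAUNCH = date(2023, 8, 28)
# Report publication date, as stated in the article
REPORT = date(2025, 12, 8)


def years_between(start: date, end: date) -> float:
    """Approximate elapsed years using the average Gregorian year length."""
    return (end - start).days / 365.25


days = (REPORT - LAUNCH).days
years = years_between(LAUNCH, REPORT)
print(f"{days} days ~ {years:.1f} years")  # prints "833 days ~ 2.3 years"
```

Shifting the assumed launch day by a few days within late August does not change the rounded result.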

One unfortunate omission: the report doesn’t share the survey questions, how they were framed, or which answer options people chose from. Having those would make the results easier to interpret.

Still, we have a lot of interesting data to go through. Let’s go straight to the first set, and one of the most important → enterprise usage.

1. Enterprise Usage is Increasing

As mentioned in the Intro section, there has always been a question mark over enterprise usage of AI tools. But now we have the data.

Since November 2024:

ChatGPT messages on the Enterprise plan have increased by 8x

The number of seats on the Enterprise plan has increased by 9x

These two increases tell me that many enterprise companies are making AI part of their core workflows.

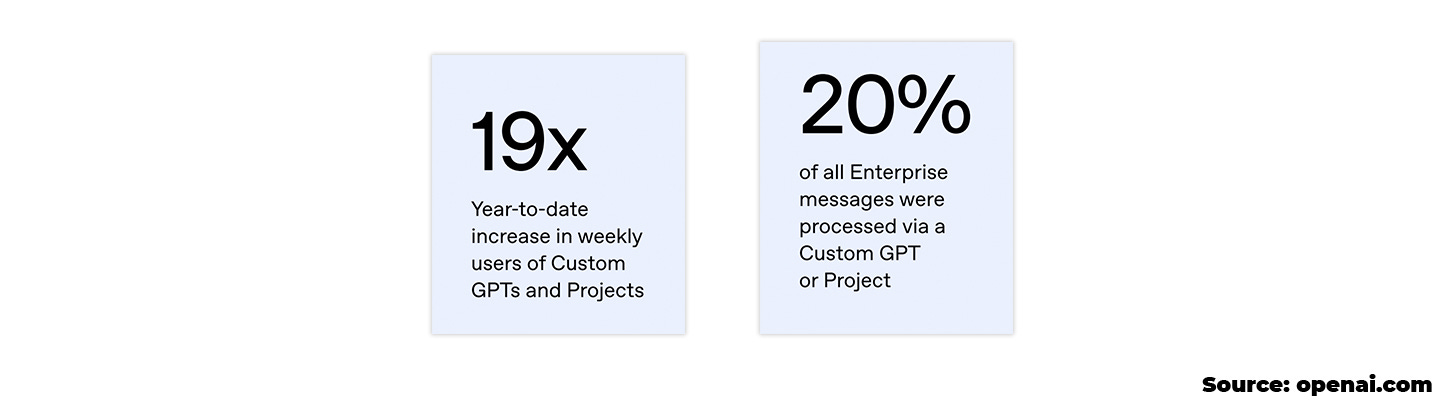

1.1 Increase in Custom GPTs and Projects

Weekly users of Custom GPTs and Projects have increased by 19x since November 2024, and, interestingly, 20% of all Enterprise messages were processed via Custom GPTs or Projects.

The report mentions that many enterprise companies have developed a culture of putting the domain knowledge on a given topic into a Custom GPT and sharing it with everyone, so colleagues can ask questions and get the information they need.

The report also gives the example of BBVA (a Spanish multinational bank), which has created more than 4,000 GPTs, a sign that the company has widely adopted the workflow described above.

1.2 Big Increase in Reasoning Token Consumption

The report also mentions that more than 9,000 companies have exceeded 10 billion tokens, and nearly 200 have exceeded 1 trillion tokens. Unfortunately, we don’t get a before/after comparison for overall token usage.

We do, however, get a before/after comparison for reasoning tokens (tokens that more advanced models generate to think before responding).

Reasoning token usage has increased approximately 320x since November 2024, which tells me that people are using advanced models much more, not just for quick and simple asks.

2. How AI Impacts Productivity

Now, let’s go through the data on productivity.